A Step-by-Step Guide to a Complete and Efficient Offline k8s Deployment

京东云开发者

2023-12-11

K8s

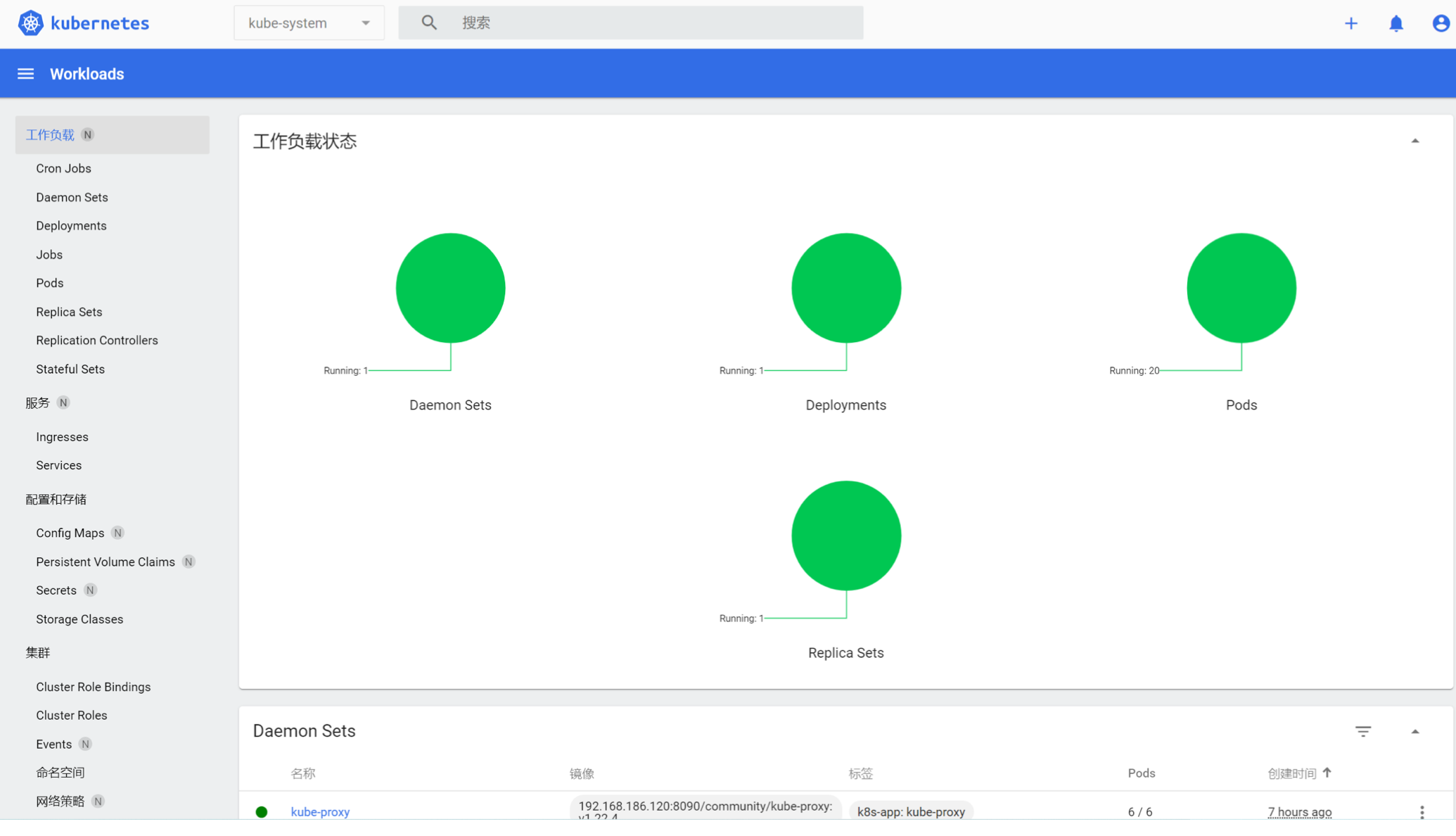

<p>

# Background

As more projects are delivered on site, we occasionally meet customer environments that have no public network access and must be deployed entirely on the internal network. This calls for a complete and efficient offline deployment solution.

# System resources

| No. | Hostname | IP | Resource type | CPU | Memory | Disk |
| --- | --- | --- | --- | --- | --- | --- |
| 01 | k8s-master1 | 10.132.10.91 | CentOS-7 | 4c | 8g | 40g |
| 02 | k8s-master2 | 10.132.10.92 | CentOS-7 | 4c | 8g | 40g |
| 03 | k8s-master3 | 10.132.10.93 | CentOS-7 | 4c | 8g | 40g |
| 04 | k8s-worker1 | 10.132.10.94 | CentOS-7 | 8c | 16g | 200g |
| 05 | k8s-worker2 | 10.132.10.95 | CentOS-7 | 8c | 16g | 200g |
| 06 | k8s-worker3 | 10.132.10.96 | CentOS-7 | 8c | 16g | 200g |
| 07 | k8s-worker4 | 10.132.10.97 | CentOS-7 | 8c | 16g | 200g |
| 08 | k8s-worker5 | 10.132.10.98 | CentOS-7 | 8c | 16g | 200g |
| 09 | k8s-worker6 | 10.132.10.99 | CentOS-7 | 8c | 16g | 200g |
| 10 | k8s-harbor&deploy | 10.132.10.100 | CentOS-7 | 4c | 8g | 500g |
| 11 | k8s-nfs | 10.132.10.101 | CentOS-7 | 2c | 4g | 2000g |
| 12 | k8s-lb | 10.132.10.120 | internal LB | 2c | 4g | 40g |

# Parameter configuration

Note: perform the following operations on all nodes.

## Basic system settings

Set up the working, log, and data storage directories:

```
$ mkdir -p /export/servers
$ mkdir -p /export/logs
$ mkdir -p /export/data
$ mkdir -p /export/upload
```

Kernel and network parameter tuning:

```
$ vim /etc/sysctl.conf
# Set the following content
fs.file-max = 1048576
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_fin_timeout = 5
net.ipv4.neigh.default.gc_stale_time = 120
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.all.arp_announce = 2
net.ipv4.conf.lo.arp_announce = 2
vm.max_map_count = 262144
# Apply immediately (sysctl -p reloads every setting in /etc/sysctl.conf)
$ sysctl -p
```

### ulimit tuning

```
$ vim /etc/security/limits.conf
# Set the following content
* soft memlock unlimited
* hard memlock unlimited
* soft nproc 102400
* hard nproc 102400
* soft nofile 1048576
* hard nofile 1048576
```

# Base environment preparation

## ansible installation

### **1. Environment**

| **Name** | **Description** |
| --- | --- |
| Operating system | CentOS Linux release 7.8.2003 |
| ansible | 2.9.27 |
| Node | deploy |

### **2. Deployment notes**

The IoT management platform runs on a large number of machines, so ansible is used to operate on them in batches and save time. This requires passwordless root SSH from the deploy node to every other node.

```
# Note: if you do not know the root password, you can set up passwordless login manually as follows.
# Run the following command on the deploy machine to generate a key pair
$ ssh-keygen -t rsa
# Copy the contents of ~/.ssh/id_rsa.pub and paste them into ~/.ssh/authorized_keys on each of the other nodes
# If authorized_keys does not exist, create it first and then paste
$ touch ~/.ssh/authorized_keys
```

### **3. Deployment steps**

**1)** **Online installation**

```
$ yum -y install https://releases.ansible.com/ansible/rpm/release/epel-7-x86_64/ansible-2.9.27-1.el7.ans.noarch.rpm
```

**2)** **Offline installation**

```
# Upload ansible and all of its dependency rpm packages in advance, then switch to the rpm directory
$ yum -y install ./*.rpm
```

**3)** **Check the version**

```
$ ansible --version
ansible 2.9.27
  config file = /etc/ansible/ansible.cfg
  configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']
  ansible python module location = /usr/lib/python2.7/site-packages/ansible
  executable location = /usr/bin/ansible
  python version = 2.7.5 (default, Apr 2 2020, 13:16:51) [GCC 4.8.5 20150623 (Red Hat 4.8.5-39)]
```

**4)** **Set up the managed host inventory**

```
$ vim /etc/ansible/hosts
[master]
10.132.10.91 node_name=k8s-master1
10.132.10.92 node_name=k8s-master2
10.132.10.93 node_name=k8s-master3

[worker]
10.132.10.94 node_name=k8s-worker1
10.132.10.95 node_name=k8s-worker2
10.132.10.96 node_name=k8s-worker3
10.132.10.97 node_name=k8s-worker4
10.132.10.98 node_name=k8s-worker5
10.132.10.99 node_name=k8s-worker6

[etcd]
10.132.10.91 etcd_name=etcd1
10.132.10.92 etcd_name=etcd2
10.132.10.93 etcd_name=etcd3

[k8s:children]
master
worker
```

**5)** **Disable SSH host key checking**

```
$ vi /etc/ansible/ansible.cfg
# Change the following setting
# uncomment this to disable SSH key host checking
host_key_checking = False
```

**6)** **Disable SELinux, open the firewall, and turn off swap**

```
$ ansible k8s -m command -a "setenforce 0"
$ ansible k8s -m command -a "sed --follow-symlinks -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config"
$ ansible k8s -m command -a "firewall-cmd --set-default-zone=trusted"
$ ansible k8s -m command -a "firewall-cmd --complete-reload"
$ ansible k8s -m command -a "swapoff -a"
```

**7) hosts configuration**

```
$ cd /export/upload && vim hosts_set.sh
# Set the following script content
#!/bin/bash
cat > /etc/hosts << EOF
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.132.10.100 deploy harbor
10.132.10.91 master01
10.132.10.92 master02
10.132.10.93 master03
10.132.10.94 worker01
10.132.10.95 worker02
10.132.10.96 worker03
10.132.10.97 worker04
10.132.10.98 worker05
10.132.10.99 worker06
EOF
# Distribute and run the script on the k8s group defined in the inventory above
$ ansible k8s -m copy -a 'src=/export/upload/hosts_set.sh dest=/export/upload'
$ ansible k8s -m command -a 'sh /export/upload/hosts_set.sh'
```

## docker installation

### **1. Environment**

| **Name** | **Description** |
| --- | --- |
| **Operating system** | CentOS Linux release 7.8.2003 |
| **docker** | docker-ce-20.10.17 |
| **Node** | deploy |

### **2. Deployment notes**

### **3. Deployment method**

**1)** **Online installation**

```
$ yum -y install docker-ce-20.10.17
```

**2)** **Offline installation**

```
# Upload docker and all of its dependency rpm packages in advance, then switch to the rpm directory
$ yum -y install ./*.rpm
```

**3)** **Reload the configuration, start the service, and check its status**

```
$ systemctl daemon-reload
$ systemctl start docker
$ systemctl status docker
```

**4)** **Enable start on boot**

```
$ systemctl enable docker
```

**5)** **Check the version**

```
$ docker version
Client: Docker Engine - Community
 Version:           20.10.17
 API version:       1.41
 Go version:        go1.17.11
 Git commit:        100c701
 Built:             Mon Jun 6 23:05:12 2022
 OS/Arch:           linux/amd64
 Context:           default
 Experimental:      true

Server: Docker Engine - Community
 Engine:
  Version:          20.10.17
  API version:      1.41 (minimum version 1.12)
  Go version:       go1.17.11
  Git commit:       a89b842
  Built:            Mon Jun 6 23:03:33 2022
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.6.8
  GitCommit:        9cd3357b7fd7218e4aec3eae239db1f68a5a6ec6
 runc:
  Version:          1.1.4
  GitCommit:        v1.1.4-0-g5fd4c4d
 docker-init:
  Version:          0.19.0
  GitCommit:        de40ad0
```

## docker-compose installation

### **1. Environment**

| **Name** | **Description** |
| --- | --- |
| **Operating system** | CentOS Linux release 7.8.2003 |
| **docker-compose** | docker-compose-linux-x86_64 |
| **Node** | deploy |

### **2. Deployment notes**

Required by the harbor private image registry.

### **3. Deployment method**

**1)** **Download docker-compose and upload it to the server**

```
$ curl -L https://github.com/docker/compose/releases/download/v2.9.0/docker-compose-linux-x86_64 -o docker-compose
```

**2)** **Install docker-compose and make it executable**

```
$ mv docker-compose /usr/local/bin/
$ chmod +x /usr/local/bin/docker-compose
$ docker-compose version
```

**3)** **Check the version**

```
$ docker-compose version
Docker Compose version v2.9.0
```

## harbor installation

### **1. Environment**

| **Name** | **Description** |
| --- | --- |
| **Operating system** | CentOS Linux release 7.8.2003 |
| **harbor** | harbor-offline-installer-v2.4.3 |
| **Node** | harbor |

### **2. Deployment notes**

Private image registry.

### **3. Download the harbor offline installer and upload it to the server**

```
$ wget https://github.com/goharbor/harbor/releases/download/v2.4.3/harbor-offline-installer-v2.4.3.tgz
```

### **4. Extract the installer**

```
$ tar -xzvf harbor-offline-installer-v2.4.3.tgz -C /export/servers/
$ cd /export/servers/harbor
```

### **5. Edit the configuration file**

```
$ mv harbor.yml.tmpl harbor.yml
$ vim harbor.yml
```

### **6. Set the following values**

```
# http.port and log.location from the original are written here in the nested form harbor.yml uses
hostname: 10.132.10.100
http:
  port: 8090
data_volume: /export/data/harbor
log:
  local:
    location: /export/logs/harbor
```

### **7. Import the harbor images**

```
$ docker load -i harbor.v2.4.3.tar.gz
# Wait for the harbor dependency images to finish loading
$ docker images
REPOSITORY                      TAG       IMAGE ID       CREATED       SIZE
goharbor/harbor-exporter        v2.4.3    776ac6ee91f4   4 weeks ago   81.5MB
goharbor/chartmuseum-photon     v2.4.3    f39a9694988d   4 weeks ago   172MB
goharbor/redis-photon           v2.4.3    b168e9750dc8   4 weeks ago   154MB
goharbor/trivy-adapter-photon   v2.4.3    a406a715461c   4 weeks ago   251MB
goharbor/notary-server-photon   v2.4.3    da89404c7cf9   4 weeks ago   109MB
goharbor/notary-signer-photon   v2.4.3    38468ac13836   4 weeks ago   107MB
goharbor/harbor-registryctl     v2.4.3    61243a84642b   4 weeks ago   135MB
goharbor/registry-photon        v2.4.3    9855479dd6fa   4 weeks ago   77.9MB
goharbor/nginx-photon           v2.4.3    0165c71ef734   4 weeks ago   44.4MB
goharbor/harbor-log             v2.4.3    57ceb170dac4   4 weeks ago   161MB
goharbor/harbor-jobservice      v2.4.3    7fea87c4b884   4 weeks ago   219MB
goharbor/harbor-core            v2.4.3    d864774a3b8f   4 weeks ago   197MB
goharbor/harbor-portal          v2.4.3    85f00db66862   4 weeks ago   53.4MB
goharbor/harbor-db              v2.4.3    7693d44a2ad6   4 weeks ago   225MB
goharbor/prepare                v2.4.3    c882d74725ee   4 weeks ago   268MB
```

### **8. Start harbor**

```
./prepare             # Run this again whenever harbor.yml is modified, so the changes take effect
./install.sh --help   # Show the startup options
./install.sh --with-chartmuseum
```

# Runtime environment setup

## docker installation

### **1. Environment**

| **Name** | **Description** |
| --- | --- |
| **Operating system** | CentOS Linux release 7.8.2003 |
| **docker** | docker-ce-20.10.17 |
| **Node** | all k8s cluster nodes |

### **2. Deployment notes**

Deploy docker as the container runtime for k8s.

### **3. Deployment method**

**1)** **Upload docker and its dependency rpm packages**

```
$ ls /export/upload/docker-rpm.tgz
```

**2)** **Distribute the package**

```
$ ansible k8s -m copy -a "src=/export/upload/docker-rpm.tgz dest=/export/upload/"
# Every node returns output like the following
CHANGED => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": true,
    "checksum": "acd3897edb624cd18a197bcd026e6769797f4f05",
    "dest": "/export/upload/docker-rpm.tgz",
    "gid": 0,
    "group": "root",
    "md5sum": "3ba6d9fe6b2ac70860b6638b88d3c89d",
    "mode": "0644",
    "owner": "root",
    "secontext": "system_u:object_r:usr_t:s0",
    "size": 103234394,
    "src": "/root/.ansible/tmp/ansible-tmp-1661836788.82-13591-17885284311930/source",
    "state": "file",
    "uid": 0
}
```

**3)** **Extract and install**

```
$ ansible k8s -m shell -a "tar xzvf /export/upload/docker-rpm.tgz -C /export/upload/ && yum -y install /export/upload/docker-rpm/*"
```

**4)** **Enable start on boot and start the service**

```
$ ansible k8s -m shell -a "systemctl enable docker && systemctl start docker"
```

**5)** **Check the version**

```
$ ansible k8s -m shell -a "docker version"
# Every node returns output like the following
CHANGED | rc=0 >>
Client: Docker Engine - Community
 Version:           20.10.17
 API version:       1.41
 Go version:        go1.17.11
 Git commit:        100c701
 Built:             Mon Jun 6 23:05:12 2022
 OS/Arch:           linux/amd64
 Context:           default
 Experimental:      true

Server: Docker Engine - Community
 Engine:
  Version:          20.10.17
  API version:      1.41 (minimum version 1.12)
  Go version:       go1.17.11
  Git commit:       a89b842
  Built:            Mon Jun 6 23:03:33 2022
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.6.8
  GitCommit:        9cd3357b7fd7218e4aec3eae239db1f68a5a6ec6
 runc:
  Version:          1.1.4
  GitCommit:        v1.1.4-0-g5fd4c4d
 docker-init:
  Version:          0.19.0
  GitCommit:        de40ad0
```

## kubernetes installation

**Installation with network access**

```
# Add the Aliyun YUM repository:
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

**Download the offline packages**

```
# Create the rpm storage directory:
mkdir -p /export/download/kubeadm-rpm
# Download the packages without installing them:
yum install -y kubelet-1.22.4 kubeadm-1.22.4 kubectl-1.22.4 --downloadonly --downloaddir /export/download/kubeadm-rpm
```

**Installation without network access**

**1)** **Upload kubeadm and its dependency rpm packages**

```
$ ls /export/upload/
kubeadm-rpm.tgz
```

**2)** **Distribute the package**

```
$ ansible k8s -m copy -a "src=/export/upload/kubeadm-rpm.tgz dest=/export/upload/"
# Every node returns output like the following
CHANGED => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": true,
    "checksum": "3fe96fe1aa7f4a09d86722f79f36fb8fde69facb",
    "dest": "/export/upload/kubeadm-rpm.tgz",
    "gid": 0,
    "group": "root",
    "md5sum": "80d5bda420db6ea23ad75dcf0f76e858",
    "mode": "0644",
    "owner": "root",
    "secontext": "system_u:object_r:usr_t:s0",
    "size": 67423355,
    "src": "/root/.ansible/tmp/ansible-tmp-1661840257.4-33361-139823848282879/source",
    "state": "file",
    "uid": 0
}
```

**3)** **Extract and install**

```
$ ansible k8s -m shell -a "tar xzvf /export/upload/kubeadm-rpm.tgz -C /export/upload/ && yum -y install /export/upload/kubeadm-rpm/*"
```

**4)** **Enable start on boot and start the service**

```
$ ansible k8s -m shell -a "systemctl enable kubelet && systemctl start kubelet"
# Note: kubelet fails to start at this point and keeps restarting. This is expected and
# resolves itself once init or join has been run; the official documentation describes this
# behavior, so kubelet.service can be ignored for now. Use the following command to check
# the kubelet status.
$ journalctl -xefu kubelet
```

**4)** **Distribute the dependency images to the cluster nodes**

```
# The images can be pulled in advance in an environment with public network access
$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4
$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.0-0
$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.22.4
$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.22.4
$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.22.4
$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.22.4
$ docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5
$ docker pull rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
$ docker pull rancher/mirrored-flannelcni-flannel:v0.19.1

# Export the image files, upload them to the deploy node, and load them into the local image store
$ ls /export/upload
$ docker load -i google_containers-coredns-v1.8.4.tar
$ docker load -i google_containers-etcd-3.5.0-0.tar
$ docker load -i google_containers-kube-apiserver-v1.22.4.tar
$ docker load -i google_containers-kube-controller-manager-v1.22.4.tar
$ docker load -i google_containers-kube-proxy-v1.22.4.tar
$ docker load -i google_containers-kube-scheduler-v1.22.4.tar
$ docker load -i google_containers-pause-3.5.tar
$ docker load -i rancher-mirrored-flannelcni-flannel-cni-plugin-v1.1.0.tar
$ docker load -i rancher-mirrored-flannelcni-flannel-v0.19.1.tar

# Tag the images for the harbor registry
$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4 10.132.10.100:8090/community/coredns:v1.8.4
$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.0-0 10.132.10.100:8090/community/etcd:3.5.0-0
$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.22.4 10.132.10.100:8090/community/kube-apiserver:v1.22.4
$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.22.4 10.132.10.100:8090/community/kube-controller-manager:v1.22.4
$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.22.4 10.132.10.100:8090/community/kube-proxy:v1.22.4
$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.22.4 10.132.10.100:8090/community/kube-scheduler:v1.22.4
$ docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5 10.132.10.100:8090/community/pause:3.5
$ docker tag rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0 10.132.10.100:8090/community/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
$ docker tag rancher/mirrored-flannelcni-flannel:v0.19.1 10.132.10.100:8090/community/mirrored-flannelcni-flannel:v0.19.1

# Push to the harbor registry (the address must match the tags created above)
$ docker push 10.132.10.100:8090/community/coredns:v1.8.4
$ docker push 10.132.10.100:8090/community/etcd:3.5.0-0
$ docker push 10.132.10.100:8090/community/kube-apiserver:v1.22.4
$ docker push 10.132.10.100:8090/community/kube-controller-manager:v1.22.4
$ docker push 10.132.10.100:8090/community/kube-proxy:v1.22.4
$ docker push 10.132.10.100:8090/community/kube-scheduler:v1.22.4
$ docker push 10.132.10.100:8090/community/pause:3.5
$ docker push 10.132.10.100:8090/community/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
$ docker push 10.132.10.100:8090/community/mirrored-flannelcni-flannel:v0.19.1
```

**5) Deploy the first master**

```
# --image-repository points at the harbor registry, including the 8090 port used when tagging
$ kubeadm init \
    --control-plane-endpoint "10.132.10.91:6443" \
    --image-repository 10.132.10.100:8090/community \
    --kubernetes-version v1.22.4 \
    --service-cidr=172.16.0.0/16 \
    --pod-network-cidr=10.244.0.0/16 \
    --token "abcdef.0123456789abcdef" \
    --token-ttl "0" \
    --upload-certs
# The following output is displayed
[init] Using Kubernetes version: v1.22.4
[preflight] Running pre-flight checks
	[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master01] and IPs [172.16.0.1 10.132.10.91]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [localhost master01] and IPs [10.132.10.91 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [localhost master01] and IPs [10.132.10.91 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 11.008638 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key: 9151ea7bb260a42297f2edc486d5792f67d9868169310b82ef1eb18f6e4c0f13
[mark-control-plane] Marking the node master01 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node master01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: abcdef.0123456789abcdef
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 10.132.10.91:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4884a98b0773bc89c36dc5fa51569293103ff093e9124431c4c8c2d5801a96a2 \
    --control-plane --certificate-key 9151ea7bb260a42297f2edc486d5792f67d9868169310b82ef1eb18f6e4c0f13

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.132.10.91:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4884a98b0773bc89c36dc5fa51569293103ff093e9124431c4c8c2d5801a96a2
```

**6) Set up the kubeconfig file for kubectl**

```
# Run the following commands
$ mkdir -p $HOME/.kube
$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
$ sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

**7) Configure the flannel network plugin**

```
# Create the flannel.yml file
$ mkdir -p /export/servers/kubernetes
$ touch /export/servers/kubernetes/flannel.yml
$ vim /export/servers/kubernetes/flannel.yml
# Set the following content; switch the image addresses depending on whether the environment has network access
---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        # With network access, the address below can be used instead
        # image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        # Without network access, use the private harbor address
        image: 10.132.10.100:8090/community/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        # With network access, the address below can be used instead
        # image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        # Without network access, use the private harbor address
        image: 10.132.10.100:8090/community/mirrored-flannelcni-flannel:v0.19.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        # With network access, the address below can be used instead
        # image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        # Without network access, use the private harbor address
        image: 10.132.10.100:8090/community/mirrored-flannelcni-flannel:v0.19.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
```

**8) Install the flannel network plugin**

```
# Apply the yml file created in the previous step
$ kubectl apply -f /export/servers/kubernetes/flannel.yml
# Check pod status
$ kubectl get pods -A
NAMESPACE      NAME                               READY   STATUS    RESTARTS   AGE
kube-flannel   kube-flannel-ds-kjmt4              1/1     Running   0          148m
kube-system    coredns-7f84d7b4b5-7qr8g           1/1     Running   0          4h18m
kube-system    coredns-7f84d7b4b5-fljws           1/1     Running   0          4h18m
kube-system    etcd-master01                      1/1     Running   0          4h19m
kube-system    kube-apiserver-master01            1/1     Running   0          4h19m
kube-system    kube-controller-manager-master01   1/1     Running   0          4h19m
kube-system    kube-proxy-wzq2t                   1/1     Running   0          4h18m
kube-system    kube-scheduler-master01            1/1     Running   0          4h19m
```

**9) Join the other master nodes**

```
# Run the following on master01
# List the tokens
$ kubeadm token list
# For reference, the join command printed by kubeadm init on master01 is:
#   kubeadm join 10.132.10.91:6443 \
#     --token abcdef.0123456789abcdef \
#     --discovery-token-ca-cert-hash sha256:4884a98b0773bc89c36dc5fa51569293103ff093e9124431c4c8c2d5801a96a2 \
#     --control-plane --certificate-key 9151ea7bb260a42297f2edc486d5792f67d9868169310b82ef1eb18f6e4c0f13

# Run the following on the other master nodes
# Run the join command above on each of them to add the master nodes to the cluster
$ kubeadm join 10.132.10.91:6443 \
    --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4884a98b0773bc89c36dc5fa51569293103ff093e9124431c4c8c2d5801a96a2 \
    --control-plane --certificate-key 9151ea7bb260a42297f2edc486d5792f67d9868169310b82ef1eb18f6e4c0f13

# If this fails, the certificate-key has usually expired; refresh it on master01 with:
$ kubeadm init phase upload-certs --upload-certs
3b647155b06311d39faf70cb094d9a5e102afd1398323e820cfb3cfd868ae58f

# Replace the certificate-key value with the one generated above and run the command again on the other master nodes
$ kubeadm join 10.132.10.91:6443 \
    --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4884a98b0773bc89c36dc5fa51569293103ff093e9124431c4c8c2d5801a96a2 \
    --control-plane --certificate-key 3b647155b06311d39faf70cb094d9a5e102afd1398323e820cfb3cfd868ae58f

# Set up the kubeconfig file for kubectl
$ mkdir -p $HOME/.kube
$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Check the node status from any master node
$ kubectl get nodes
NAME       STATUS   ROLES                  AGE     VERSION
master01   Ready    control-plane,master   5h58m   v1.22.4
master02   Ready    control-plane,master   45m     v1.22.4
master03   Ready    control-plane,master   44m     v1.22.4
```

**9) Join the worker nodes**

```
# On each worker node, run the worker join command printed by kubeadm init on master01
# to add the worker nodes to the cluster
$ kubeadm join 10.132.10.91:6443 \
    --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4884a98b0773bc89c36dc5fa51569293103ff093e9124431c4c8c2d5801a96a2

# If this fails, the token has usually expired; regenerate the join command on master01 with:
$ kubeadm token create --print-join-command
kubeadm join 10.132.10.91:6443 \
    --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:cf30ddd3df1c6215b886df1ea378a68ad5a9faad7933d53ca9891ebbdf9a1c3f

# Run the regenerated join command again on the worker nodes
# Check the cluster status
$ kubectl get nodes
NAME       STATUS   ROLES                  AGE     VERSION
master01   Ready    control-plane,master   6h12m   v1.22.4
master02   Ready    control-plane,master   58m     v1.22.4
master03   Ready    control-plane,master   57m     v1.22.4
worker01   Ready    <none>                 5m12s   v1.22.4
worker02   Ready    <none>                 4m10s   v1.22.4
worker03   Ready    <none>                 3m42s   v1.22.4
```

</p>

**10) Configure kubernetes dashboard**

```
# Save the following as /export/servers/kubernetes/dashboard.yml (the path applied in step 13)
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31001
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.5.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.7
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
```

**11) Generate a self-signed certificate for the Dashboard**

```
$ mkdir -p /export/servers/kubernetes/certs && cd /export/servers/kubernetes/certs/
$ openssl genrsa -out dashboard.key 2048
# Set CN to the address used to reach the Dashboard (172.16.16.42 in this example)
$ openssl req -new -key dashboard.key -out dashboard.csr -subj /C=CN/ST=BEIJING/L=BEIJING/O=JD/OU=JD/CN=172.16.16.42
$ openssl x509 -req -days 3650 -in dashboard.csr -signkey dashboard.key -out dashboard.crt
```

**12) Run the following commands**

```
# Remove the taint from the master nodes
$ kubectl taint nodes --all node-role.kubernetes.io/master-
# Create the namespace
$ kubectl create namespace kubernetes-dashboard
# Create the Secret
$ kubectl create secret tls kubernetes-dashboard-certs -n kubernetes-dashboard --key dashboard.key \
  --cert dashboard.crt
```

**13) Apply the Dashboard YAML manifest**

```
$ kubectl apply -f /export/servers/kubernetes/dashboard.yml
# Check the pod status
$ kubectl get pods -A | grep kubernetes-dashboard
kubernetes-dashboard   dashboard-metrics-scraper-c45b7869d-rbdt4   1/1   Running   0   15m
kubernetes-dashboard   kubernetes-dashboard-764b4dd7-rt66t         1/1   Running   0   15m
```

**14) Open the Dashboard page**

```
# Browser URL; the IP can be that of any cluster node (or the LB address)
https://192.168.186.121:31001/#/login
```

**15) Create an access token**

```
# Create the configuration file dashboard-adminuser.yaml
$ touch /export/servers/kubernetes/dashboard-adminuser.yaml && vim /export/servers/kubernetes/dashboard-adminuser.yaml
# Enter the following content
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

# Apply the YAML file
$ kubectl create -f /export/servers/kubernetes/dashboard-adminuser.yaml
# Expected output
serviceaccount/admin-user created
clusterrolebinding.rbac.authorization.k8s.io/admin-user created

# Note: this creates a service account named admin-user in the kubernetes-dashboard namespace
# and binds the cluster-admin role to it, which gives admin-user administrator privileges.
# kubeadm already creates the cluster-admin role when it sets up the cluster, so it only needs to be bound.

# Show the token of the admin-user account
$ kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')
# Expected output
Name:         admin-user-token-9fpps
Namespace:    kubernetes-dashboard
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin-user
              kubernetes.io/service-account.uid: 72c1aa28-6385-4d1a-b22c-42427b74b4c7

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1099 bytes
namespace:  20 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IjFEckU0NXB5Yno5UV9MUFkxSUpPenJhcTFuektHazM1c2QzTGFmRzNES0EifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTlmcHBzIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI3MmMxYWEyOC02Mzg1LTRkMWEtYjIyYy00MjQyN2I3NGI0YzciLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.oA3NLhhTaXd2qvWrPDXat2w9ywdWi_77SINk4vWkfIIzMmxBEHnqvDIBvhRC3frIokNSvT71y6mXN0KHu32hBa1YWi0MuzF165ZNFtM_rSQiq9OnPxeFvLaKS-0Vzr2nWuBx_-fTt7gESReSMLEJStbPb1wOnR6kqtY66ajKK5ILeIQ77I0KXYIi7GlPEyc6q4bIjweZ0HSXDPR4JSnEAhrP8Qslrv3Oft4QZVNj47x7xKC4dyyZOMHUIj9QhkpI2gMbiZ8XDUmNok070yDc0TCxeTZKDuvdsigxCMQx6AesD-8dca5Hb8Sm4mEPkGJekvMzkLkM97y_pOBPkfTAIA

# Copy the token printed by the command above into the Token field on the login page to log in to the Dashboard
```

**16) The Dashboard after login**

[Screenshot: Dashboard after login]

### kubectl installation

**1\. Environment**

| **Name** | **Description** |
| --- | --- |
| **Operating system** | CentOS Linux release 7.8.2003 |
| **kubectl** | kubectl-1.22.4-0.x86_64 |
| **Node** | deploy |

**2\. Description**

The Kubernetes command-line client, kubectl.

**3\. Extract the kubeadm-rpm package uploaded earlier**

```
$ tar xzvf kubeadm-rpm.tgz
```

**4\. Install**

```
$ rpm -ivh bc7a9f8e7c6844cfeab2066a84b8fecf8cf608581e56f6f96f80211250f9a5e7-kubectl-1.22.4-0.x86_64.rpm
```

**5\. Configure kubeconfig**

```
# Create the kubectl configuration file (kubeconfig)
$ mkdir -p $HOME/.kube
$ sudo touch $HOME/.kube/config
$ sudo chown $(id -u):$(id -g) $HOME/.kube/config
# Copy the kubeconfig content from any master node into the file above
# (on a kubeadm cluster this is typically /etc/kubernetes/admin.conf)
```

**6\. Check the version**

```
$ kubectl version
Client Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.4", GitCommit:"b695d79d4f967c403a96986f1750a35eb75e75f1", GitTreeState:"clean", BuildDate:"2021-11-17T15:48:33Z", GoVersion:"go1.16.10", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.4", GitCommit:"b695d79d4f967c403a96986f1750a35eb75e75f1", GitTreeState:"clean", BuildDate:"2021-11-17T15:42:41Z", GoVersion:"go1.16.10", Compiler:"gc", Platform:"linux/amd64"}
```

## helm installation

**1\. Environment**

| **Name** | **Description** |
| --- | --- |
| **Operating system** | CentOS Linux release 7.8.2003 |
| **helm** | helm-v3.9.3-linux-amd64.tar.gz |
| **Node** | deploy |

**2\. Description**

A package and configuration management tool for Kubernetes resources.

**3\. Download the Helm offline package and upload it to the server**

```
$ wget https://get.helm.sh/helm-v3.9.3-linux-amd64.tar.gz
```

**4\. Extract the package**

```
$ tar -zxvf helm-v3.9.3-linux-amd64.tar.gz -C /export/servers/
$ cd /export/servers/linux-amd64
```

**5\. Copy the binary and add execute permission**

```
$ cp helm /usr/local/bin/
$ chmod +x /usr/local/bin/helm
```

**6\. Check the version**

```
$ helm version
version.BuildInfo{Version:"v3.9.3", GitCommit:"414ff28d4029ae8c8b05d62aa06c7fe3dee2bc58", GitTreeState:"clean", GoVersion:"go1.17.13"}
```

**Configure local storage backed by NAS**

```
$ mkdir -p /export/servers/helm_chart/local-path-storage && cd /export/servers/helm_chart/local-path-storage
$ vim local-path-storage.yaml
# Enter the following content. Set "paths" to the NAS directory
# (/nas_data/jdiot/local-path-provisioner here); create the directory if it does not exist
apiVersion: v1
kind: Namespace
metadata:
  name: local-path-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
  - apiGroups: [ "" ]
    resources: [ "nodes", "persistentvolumeclaims", "configmaps" ]
    verbs: [ "get", "list", "watch" ]
  - apiGroups: [ "" ]
    resources: [ "endpoints", "persistentvolumes", "pods" ]
    verbs: [ "*" ]
  - apiGroups: [ "" ]
    resources: [ "events" ]
    verbs: [ "create", "patch" ]
  - apiGroups: [ "storage.k8s.io" ]
    resources: [ "storageclasses" ]
    verbs: [ "get", "list", "watch" ]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
        - name: local-path-provisioner
          image: rancher/local-path-provisioner:v0.0.21
          imagePullPolicy: IfNotPresent
          command:
            - local-path-provisioner
            - --debug
            - start
            - --config
            - /etc/config/config.json
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config/
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      volumes:
        - name: config-volume
          configMap:
            name: local-path-config
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/nas_data/jdiot/local-path-provisioner"]
        }
      ]
    }
  setup: |-
    #!/bin/sh
    while getopts "m:s:p:" opt
    do
        case $opt in
            p)
            absolutePath=$OPTARG
            ;;
            s)
            sizeInBytes=$OPTARG
            ;;
            m)
            volMode=$OPTARG
            ;;
        esac
    done
    mkdir -m 0777 -p ${absolutePath}
  teardown: |-
    #!/bin/sh
    while getopts "m:s:p:" opt
    do
        case $opt in
            p)
            absolutePath=$OPTARG
            ;;
            s)
            sizeInBytes=$OPTARG
            ;;
            m)
            volMode=$OPTARG
            ;;
        esac
    done
    rm -rf ${absolutePath}
  helperPod.yaml: |-
    apiVersion: v1
    kind: Pod
    metadata:
      name: helper-pod
    spec:
      containers:
      - name: helper-pod
        image: busybox
```

Note: the images referenced above must be downloaded in an environment with public network access and imported into the private image registry. Change the image addresses above so that they are pulled from the private registry.

**Apply the local storage YAML**

```
$ kubectl apply -f local-path-storage.yaml -n local-path-storage
```

**Set the default Kubernetes storage class**

```
$ kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```

Note: the middleware and services deployed later must have their storage changed to the local storage: "storageClass": "local-path"

#### Author: 郝建伟
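The closing note asks later middleware and services to use the `local-path` storage class. As a minimal sketch of what that means at the PersistentVolumeClaim level, the helper below prints a PVC manifest that requests storage from `local-path`. The function name `render_pvc`, the claim name `demo-data` and the size `1Gi` are illustrative assumptions and are not part of the original deployment.

```shell
#!/bin/sh
# Hypothetical helper (not from the original article): print a PVC manifest
# that requests a volume from the local-path StorageClass defined above.
# $1 = claim name, $2 = requested size (for example 1Gi)
render_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: $2
EOF
}

# Print an example manifest; on the deploy node it could be piped to kubectl:
#   render_pvc demo-data 1Gi | kubectl apply -f -
render_pvc demo-data 1Gi
```

Because the StorageClass above uses `volumeBindingMode: WaitForFirstConsumer`, a claim created this way is expected to stay `Pending` until a pod that mounts it is scheduled.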

Original article. Contact the author for authorization before reprinting.
